Published on: August 12, 2022

Introduction

Extended reality (XR) headsets aim to trick us into believing that we are immersed in either a virtual world or a blend of real and digital environments. They do so by displaying high-fidelity images in front of our eyes. These images are updated in quick succession to reflect any changes in the digital realm and the user's surroundings. However, rendering this video stream requires powerful hardware. Rendering (generating a sequence of images on a computer) becomes more demanding as resolution and image refresh rates increase. Several approaches are used today to tackle video rendering and video stream transmission. This post briefly describes them, takes a closer look at wireless technology's role in the XR video stream's journey from rendering machine to display device, and highlights the main challenges faced by today's wireless headsets.

XR video rendering and transmission

Video displayed on an XR headset has to meet two requirements: 1) there should be no perceivable latency and 2) it has to look realistic. When it comes to video rendering, the two pull in opposite directions. To illustrate, consider a headset capable of displaying 60 FPS (frames per second) at a resolution of 1400×1400 px. The headset has to render and display almost 120 million pixels per second (1400 × 1400 × 60 ≈ 117.6 million). To reduce latency, the manufacturer can raise the display refresh rate to 120 FPS. But now the device has to render twice as many pixels per second. The manufacturer then has to either halve the number of pixels per frame (to roughly 990×990 px) or upgrade the hardware components responsible for video rendering. It is this push towards higher refresh rates and higher resolutions that is driving video rendering away from the headset and onto external devices.
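The pixel arithmetic above can be checked with a few lines of Python. This is only a sketch: like the text, it treats the 1400×1400 px frame as a single image and ignores per-eye rendering and other real-world overheads.

```python
import math


def pixel_rate(width_px: int, height_px: int, fps: int) -> int:
    """Number of pixels that must be rendered and displayed per second."""
    return width_px * height_px * fps


def side_for_budget(rate_px_per_s: float, fps: int) -> float:
    """Side length of a square frame that fits a fixed pixel budget at a given frame rate."""
    return math.sqrt(rate_px_per_s / fps)


base = pixel_rate(1400, 1400, 60)      # the 60 FPS example from the text
doubled = pixel_rate(1400, 1400, 120)  # same resolution at 120 FPS
side_120 = side_for_budget(base, 120)  # square frame that keeps the 60 FPS pixel budget

print(f"60 FPS:  {base / 1e6:.1f} Mpx/s")
print(f"120 FPS: {doubled / 1e6:.1f} Mpx/s")
print(f"Same budget at 120 FPS: about {side_120:.0f}x{side_120:.0f} px")
```

Halving the pixel count shrinks each side by a factor of √2, which is why 1400 px drops to roughly 990 px rather than 700 px.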

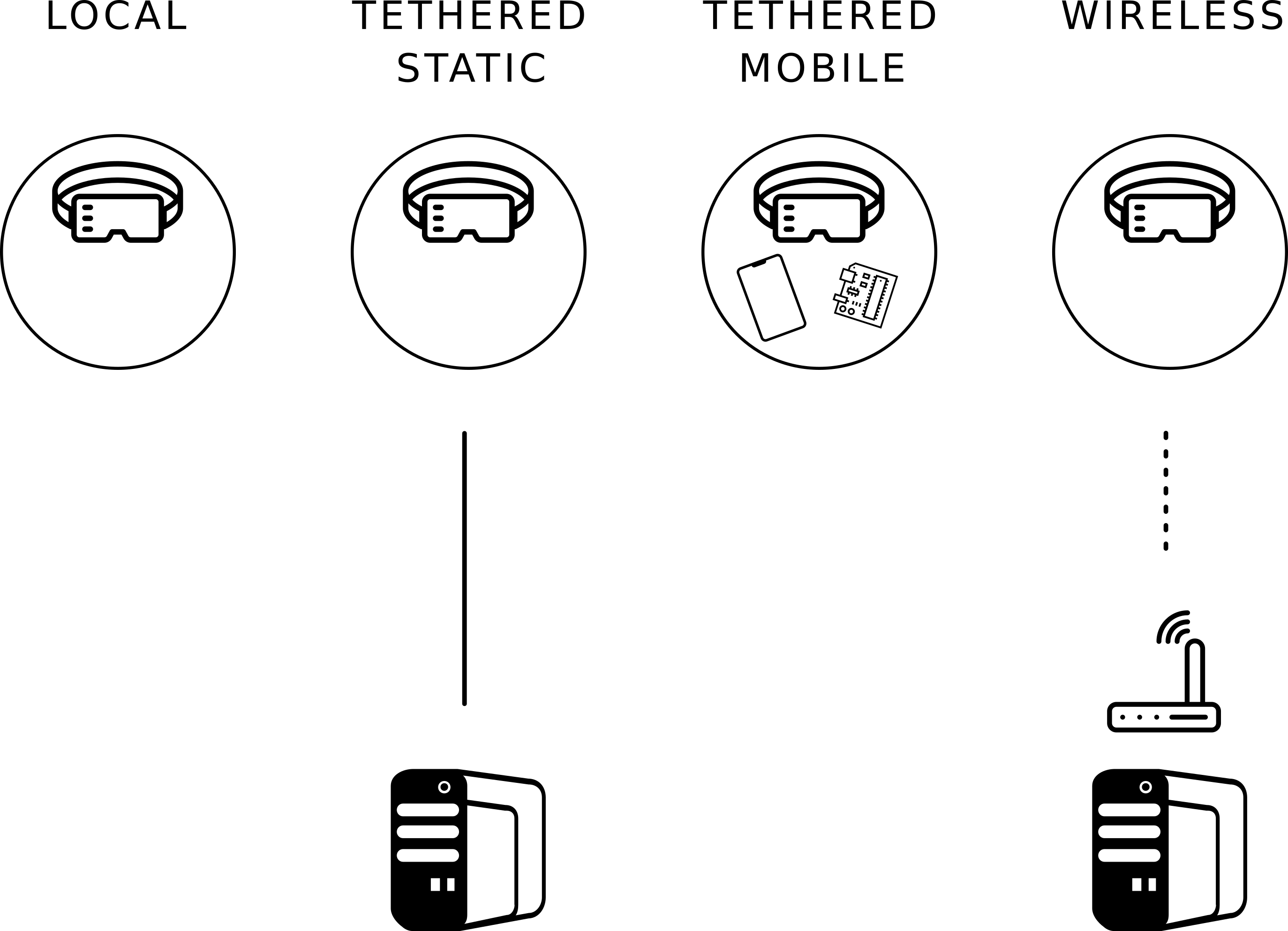

Conducting video rendering on an external device, such as a powerful desktop computer, enables higher-fidelity video. However, this requires a data link between the two devices. Depending on where rendering takes place and how the data is transferred, I have grouped the approaches into four categories, as illustrated in Figure 1 and described below.

Figure 1: Comparison of the 4 approaches to XR video rendering and transmission. Devices within the circle are worn or carried by the user.

Local: Video rendering takes place on the XR headset itself. The headset can therefore work independently, but with limited processing capabilities. Examples include augmented reality (AR) headsets such as Microsoft HoloLens and most devices manufactured by Vuzix.

Tethered static: The headset is connected via cable to an external device, typically a high-performance computer, where rendering takes place. The cable adds negligible latency, provided the headset connects directly to the computer's GPU. This approach is preferred by VR-first headsets (with limited AR capabilities), such as those in Varjo's product lineup.

Tethered mobile: The headset relies on remote rendering on a smaller, more portable device. Compared to the tethered static case, performance suffers because the smaller rendering device has less processing power and because fewer connection options are available (e.g. only USB). Examples are mostly AR headsets, whose users often need more freedom of movement. The rendering can take place on anything from a laptop or smartphone (Lenovo ThinkReality A3) to a dedicated mini processing node (Magic Leap 1 and Nreal Light), or even a backpack-like computer (e.g. HP VR Backpack).

Wireless: A headset with wireless connectivity can rely on high-fidelity video rendering on a static device, such as a computer, without limiting user mobility. The benefits, trade-offs, and wireless headsets available today are discussed in the next section.

Note that a single headset might support several rendering and transmission modes, which makes the device more versatile. For example, an architect might use it in tethered static mode during the design process and in local mode when visiting a client to discuss the project.

Wireless streaming of XR video

Figure 2 shows a common problem with XR headsets that rely on tethered static video rendering and transmission: the user depends on another person to manage the headset's cable, keeping both the user and other people nearby from getting entangled.

Figure 2: User wearing a tethered static XR headset. [1]

Streaming over a wireless connection removes the need for cables. Whether alone in the room or in a lively environment, the XR user has complete freedom of movement. Moving the rendering hardware to an external device also means that the headset no longer has to house it, making the headset smaller, less bulky, lighter, and generally more appealing to consumers. Furthermore, reducing the amount of specialised hardware in the headset can lower its price. Yet wireless streaming does introduce additional steps into the video pipeline, such as video encoding, radio transmission, and decoding, that can degrade overall performance.
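To make this trade-off concrete, here is a rough latency budget sketched in Python. Every stage latency and the 20 ms budget below are hypothetical placeholders chosen for illustration, not measurements of any headset. The only point is that the wireless path stacks encoding, radio, and decoding stages on top of what a tethered static setup already needs.

```python
# Hypothetical per-stage latencies in milliseconds (illustrative, not measured).
TETHERED_MS = {"render": 8.0, "display": 2.0}
WIRELESS_EXTRA_MS = {"encode": 3.0, "radio link": 4.0, "decode": 3.0}
BUDGET_MS = 20.0  # assumed end-to-end latency budget for this example


def total_ms(*stage_groups: dict[str, float]) -> float:
    """Sum the latency of every stage in the given groups of pipeline stages."""
    return sum(ms for group in stage_groups for ms in group.values())


tethered = total_ms(TETHERED_MS)
wireless = total_ms(TETHERED_MS, WIRELESS_EXTRA_MS)

for name, ms in (("tethered static", tethered), ("wireless", wireless)):
    status = "within" if ms <= BUDGET_MS else "over"
    print(f"{name}: {ms:.1f} ms ({status} the assumed {BUDGET_MS:.0f} ms budget)")
```

With these made-up numbers the wireless path uses the whole budget, leaving no headroom for the network effects discussed below.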

Two widespread XR headsets stand out for their wireless streaming support: the Oculus Quest 2, which supports Wi-Fi 6 (IEEE 802.11ax, 2.4 and 5 GHz), and the HTC Vive Pro 2, which relies on a custom 60 GHz transceiver (the same band as millimeter-wave Wi-Fi, also called WiGig, or IEEE 802.11ad/ay).

Let us now summarize the main problems associated with wireless networks for XR use cases.

Latency. Packets are often delayed before transmission, even when only a single user is connected. This can happen because transmission only starts once a given amount of data has accumulated (aggregation), because airtime is spent advertising the wireless network to potential users, or for other reasons.
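A simple model shows how aggregation alone adds delay: if the sender waits until a fixed amount of data has queued up, the wait is that amount divided by the rate at which video data arrives. The 64 KiB threshold and the bitrates below are hypothetical values picked for illustration, and real Wi-Fi aggregation logic is more adaptive than this sketch.

```python
def fill_time_ms(aggregate_bytes: int, arrival_rate_bps: float) -> float:
    """Time spent waiting for `aggregate_bytes` of video to queue up before sending."""
    return aggregate_bytes * 8 / arrival_rate_bps * 1e3


AGGREGATE_BYTES = 64 * 1024    # hypothetical aggregation threshold
FRAME_INTERVAL_MS = 1e3 / 120  # duration of one frame at 120 FPS

for rate_mbps in (50, 100, 400):  # hypothetical video bitrates in Mbit/s
    wait = fill_time_ms(AGGREGATE_BYTES, rate_mbps * 1e6)
    share = wait / FRAME_INTERVAL_MS
    print(f"{rate_mbps:>3} Mbit/s: waits {wait:.2f} ms ({share:.0%} of a 120 FPS frame)")
```

The lower the video bitrate, the longer the queue takes to fill, so a fixed aggregation threshold hurts low-rate streams the most.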

Data rate. Transmitting a video stream with minimal delay requires video compression that adds almost no latency. This, however, produces loosely compressed video that needs a higher data rate than streaming pre-recorded content, e.g. from YouTube or Netflix.
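The sketch below estimates the required data rate for the 1400×1400 px, 60 FPS example from earlier. It assumes 24 bits per pixel (8-bit RGB), and both compression ratios are hypothetical round numbers meant only to contrast fast, low-latency encoding with slower, buffered encoding; they are not figures for any particular codec.

```python
def raw_bitrate_bps(width_px: int, height_px: int, fps: int, bits_per_pixel: int = 24) -> int:
    """Uncompressed video bitrate; 24 bits per pixel assumes 8-bit RGB."""
    return width_px * height_px * fps * bits_per_pixel


def compressed_bitrate_bps(raw_bps: float, compression_ratio: float) -> float:
    """Bitrate after compression by a given ratio (e.g. 10 means 10:1)."""
    return raw_bps / compression_ratio


raw = raw_bitrate_bps(1400, 1400, 60)
print(f"uncompressed: {raw / 1e9:.2f} Gbit/s")

# Hypothetical ratios: low-latency encoding compresses less than
# the buffered encoding used for pre-recorded streaming content.
for label, ratio in (("low-latency (assumed 10:1)", 10), ("buffered (assumed 100:1)", 100)):
    print(f"{label}: {compressed_bitrate_bps(raw, ratio) / 1e6:.0f} Mbit/s")
```

Under these assumptions, the low-latency stream needs ten times the data rate of the buffered one for the same picture.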

Coverage. An access point (Wi-Fi) or base station (4G) covers only a limited area, sometimes a single room. Moving between coverage areas triggers automatic handovers, which associate the headset with a new access point or base station. However, such handovers cause visible latency and can result in brief data loss.
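To see why even a short handover is visible, the sketch counts how many frame intervals an interruption spans. The 50 ms interruption is a hypothetical value chosen for illustration, not a measured handover time for Wi-Fi or 4G.

```python
def frame_intervals_spanned(interruption_ms: int, fps: int) -> int:
    """Number of frame intervals a link interruption covers, rounded up."""
    # Integer ceiling division avoids floating-point rounding surprises.
    return -(-(interruption_ms * fps) // 1000)


HANDOVER_MS = 50  # hypothetical handover interruption

for fps in (60, 90, 120):
    frames = frame_intervals_spanned(HANDOVER_MS, fps)
    print(f"{fps} FPS: a {HANDOVER_MS} ms gap spans about {frames} frame(s)")
```

The higher the refresh rate, the more frames go stale during the same gap, so the faster displays discussed earlier make handovers more noticeable.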

Congestion. More users means fewer resources per user. Even with fine-grained time, frequency, and space division, adding users will eventually force lower resolution and/or higher latency.
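A minimal fair-share model illustrates the effect: the network's effective throughput is split equally among active users, and once each share drops below the stream's bitrate, quality must drop. Both the 600 Mbit/s capacity and the 150 Mbit/s stream bitrate are hypothetical, and the model ignores protocol overhead and gains from spatial multiplexing.

```python
def per_user_mbps(effective_capacity_mbps: float, users: int) -> float:
    """Equal share of the network's effective throughput among active users."""
    return effective_capacity_mbps / users


def max_streams(effective_capacity_mbps: float, stream_mbps: float) -> int:
    """How many XR streams of a given bitrate fit before quality must drop."""
    return int(effective_capacity_mbps // stream_mbps)


CAPACITY_MBPS = 600.0  # hypothetical effective throughput of one access point
STREAM_MBPS = 150.0    # hypothetical bitrate of one XR video stream

for users in range(1, 7):
    share = per_user_mbps(CAPACITY_MBPS, users)
    verdict = "ok" if share >= STREAM_MBPS else "must lower quality"
    print(f"{users} user(s): {share:.0f} Mbit/s each, {verdict}")

print(f"max streams at full quality: {max_streams(CAPACITY_MBPS, STREAM_MBPS)}")
```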

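Outage due to shadowing. A wireless signal is made up of electromagnetic waves, just like visible light. If someone steps between your eyes and a light source, the light is blocked. A similar effect occurs with radio waves: it is severe at 60 GHz and becomes gradually weaker at lower frequencies, where waves bend around obstacles more readily. Thus, maintaining a reliable link at high frequencies becomes extremely hard whenever the line of sight is blocked, for example by the user's own hand or by another person.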
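A rough way to see why 60 GHz is so sensitive is to compare the wavelength with the size of a typical obstacle: the more wavelengths an obstacle spans, the less the wave diffracts around it and the sharper the "shadow" it casts. The sketch below uses λ = c / f; the 10 cm obstacle (roughly a hand) is an assumed size for illustration, and this rule of thumb is no substitute for a proper propagation model.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def wavelength_m(freq_hz: float) -> float:
    """Free-space wavelength in metres for a given frequency in hertz."""
    return SPEED_OF_LIGHT_M_S / freq_hz


OBSTACLE_M = 0.10  # hypothetical obstacle size, e.g. a hand

for label, freq in (("2.4 GHz", 2.4e9), ("5 GHz", 5e9), ("60 GHz", 60e9)):
    lam = wavelength_m(freq)
    print(f"{label}: wavelength {lam * 100:.2f} cm, "
          f"obstacle spans {OBSTACLE_M / lam:.1f} wavelengths")
```

At 60 GHz the wavelength is about 5 mm, so even a hand spans around twenty wavelengths, while at 2.4 GHz it spans less than one.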

Outage due to misalignment. Antennas at higher frequencies are typically smaller, so a larger number of them is used to cover the same effective area. Like ocean waves passing through several openings in a barrier, the signals from these antennas form a pattern of constructive and destructive interference. Luckily, this pattern can be shaped and pointed in the direction of the communicating device, a technique called beamforming. But XR users are known for frequent and fast head movements (exceeding 180°/s). If beam scanning cannot keep up with these movements, the link suffers frequent outages.
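The sketch below illustrates both ideas with a textbook model: a uniform linear array with half-wavelength element spacing, steered by applying a per-element phase shift so that contributions add in phase towards the target. It numerically finds the half-power (-3 dB) beamwidth and then estimates how long a 180°/s head rotation takes to move the peer from the beam centre to the beam edge. The 16-element array is a hypothetical choice, and real 60 GHz devices use more elaborate arrays and beam-training procedures than this.

```python
import cmath
import math


def array_factor(theta_deg: float, steer_deg: float, n_elements: int) -> float:
    """Normalized array factor of a uniform linear array with half-wavelength spacing.

    Element phases are chosen so that contributions add constructively towards
    `steer_deg`; in other directions they partially or fully cancel.
    """
    # With spacing d = wavelength / 2, the phase step k*d equals pi.
    psi = math.pi * (math.sin(math.radians(theta_deg)) - math.sin(math.radians(steer_deg)))
    total = sum(cmath.exp(1j * n * psi) for n in range(n_elements))
    return abs(total) / n_elements


def half_power_beamwidth_deg(steer_deg: float, n_elements: int, step_deg: float = 0.01) -> float:
    """Scan outwards from the steering angle until the power drops below half."""
    def edge(direction: int) -> float:
        offset = 0.0
        while array_factor(steer_deg + direction * offset, steer_deg, n_elements) ** 2 >= 0.5:
            offset += step_deg
        return offset

    return edge(+1) + edge(-1)


N_ELEMENTS = 16            # hypothetical array size
HEAD_SPEED_DEG_S = 180.0   # head-rotation speed mentioned in the text

hpbw = half_power_beamwidth_deg(0.0, N_ELEMENTS)
# Time for the peer to drift from the beam centre to the -3 dB edge.
dwell_ms = (hpbw / 2) / HEAD_SPEED_DEG_S * 1e3

print(f"{N_ELEMENTS}-element array: beamwidth about {hpbw:.1f} deg")
print(f"beam edge reached after about {dwell_ms:.0f} ms of head rotation")
```

Under these assumptions the beam must be re-steered within a couple of frame intervals of a fast head turn, which is why slow beam scanning leads to outages.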

Conclusion

Consumer appeal (form and price), immersion fidelity, and freedom of movement are the three pillars that today's consumer-grade XR headsets are trying to address jointly. Remote rendering and wireless streaming offer a promising solution that several manufacturers have started pursuing. However, today's wireless standards are not yet a viable alternative to a tethered connection for streaming XR video. Follow the MINTS website to catch a glimpse of how we can solve some of these problems in the future.

If you made it to the end and can't wait for more content, or want to learn more about us and our projects, you can always follow our social media channels.

Citations

[1] Photo by Sophia Sideri on Unsplash